Subh Bhattacharya (Lead, Healthcare & Sciences at AMD) explains how Artificial Intelligence (AI) can be utilised in healthcare to save lives and improve the care patients receive.

The growing influence of technology in healthcare services over the past several decades has significantly increased human life expectancy. Now, in the post-pandemic era, technology solutions based on artificial intelligence (AI) are evolving even more rapidly for diagnosing critical ailments and improving patient care. The medical community’s acceptance of AI in all aspects of healthcare is of paramount importance, especially for early diagnosis, for supporting surgeons and specialists during procedures, and for the rapid development of drugs and vaccines.

Using AI to predict an impending heart attack earlier, or to detect skin cancer at a very early stage, could save many lives. Prior to the pandemic, cardiovascular disease, chiefly heart attacks and strokes, was the leading cause of death worldwide (17.9M deaths annually according to the WHO), although deaths from heart attacks were on a steady decline. Regrettably, a new analysis of data from the Cedars-Sinai Research Center found that deaths from heart attacks rose significantly during the pandemic: in the first year alone they rose 14%, and they continued to increase thereafter across all age groups. Skin cancer, including its most aggressive type, melanoma, can in most cases be cured when detected early. The five-year relative survival rate for melanoma is nearly 99% when it is detected while still localised, but drops to only 22.5% once it has metastasised to distant organs.

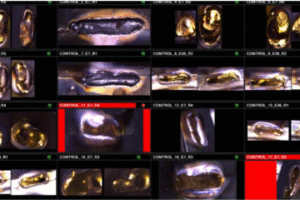

Unfortunately, there is currently no easy way for medical professionals to predict an impending heart attack. However, combining deep learning algorithms trained on patterns in patient data with advanced echocardiogram and ultrasound programs, for example, has the potential to predict a future heart attack. Diagnosing a skin cancer like melanoma, which is extremely hard to detect, requires extensive diagnostic imaging and more invasive methods such as biopsy. However, capturing video of a visibly affected area and running a trained deep learning algorithm on a small client device at the edge has the potential to identify this critical illness earlier, more cost-effectively and less invasively.

Development and deployment of a deep-learning neural network

A medical AI-based deep learning (DL) method is developed in two stages. The first stage is to create and train a model using previously collected data and images. Datasets need to be as large as possible (typically hundreds of thousands of samples), and they must be cleaned, curated and labelled before they can be used for training. When pre-processing the data or images in the dataset, one must also ensure that the different classes are represented in roughly equal numbers; a class imbalance biases the model toward the majority class and reduces the accuracy of the result. This training process requires vast computing resources and long periods of time, and is typically done within a cloud or HPC (high-performance computing) infrastructure using GPUs (graphics processing units).
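The class-balancing step can be illustrated with a short Python sketch. It shows two common remedies for class imbalance: inverse-frequency class weights (the same heuristic scikit-learn applies for `class_weight="balanced"`) and random oversampling of minority classes. The toy labels, file names and helper names (`class_weights`, `oversample`) are hypothetical and for illustration only; a real medical imaging pipeline would balance only the training split and might use data augmentation rather than plain duplication.

```python
import random
from collections import Counter

def class_weights(labels):
    """Inverse-frequency weights w_c = N / (K * n_c): rarer classes get larger weights."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}

def oversample(samples, labels, seed=0):
    """Randomly duplicate minority-class samples until every class matches the largest class.

    Apply this to the training split only, so duplicated images never leak into validation.
    """
    rng = random.Random(seed)
    by_class = {}
    for s, y in zip(samples, labels):
        by_class.setdefault(y, []).append(s)
    target = max(len(items) for items in by_class.values())
    out_samples, out_labels = [], []
    for y, items in by_class.items():
        extra = [rng.choice(items) for _ in range(target - len(items))]
        out_samples.extend(items + extra)
        out_labels.extend([y] * (len(items) + len(extra)))
    return out_samples, out_labels

# Hypothetical toy dataset: 8 benign lesion images and 2 melanoma images.
labels = ["benign"] * 8 + ["melanoma"] * 2
images = [f"img_{i}.png" for i in range(len(labels))]

print(class_weights(labels))   # {'benign': 0.625, 'melanoma': 2.5}
bal_images, bal_labels = oversample(images, labels)
print(sorted(Counter(bal_labels).items()))  # [('benign', 8), ('melanoma', 8)]
```

Weighting leaves the dataset unchanged and scales each class's contribution to the training loss, whereas oversampling changes what the model sees in each epoch; which works better depends on the data.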

Inferencing the results

The second stage is to deploy the trained model on an embedded computing device or in an embedded medical system, such as an X-ray machine or ultrasound system, where it performs AI inference to produce a result for a patient under examination. This device can be any appliance at a clinic, hospital or doctor’s office. The size, quality and labelling of the dataset used for training determine the final accuracy of the result.

An efficient way to deploy the model is on an adaptive hardware platform containing deep learning processing units (DPUs). DPUs are programmable execution units that run on embedded devices such as FPGA (Field Programmable Gate Array)-based adaptive SoCs (Systems-on-Chip), which combine FPGA and ASIC-like logic with multiple embedded CPU cores. These embedded platforms are ideal for running deep learning inference on an edge client device because they deliver high performance in a small, power-efficient and cost-effective footprint.

Key challenges and solution requirements

The first critical step toward increased use of AI in healthcare is sophisticated algorithm development, which needs to happen at the research level in universities, at independent software companies and at medical system makers. There is a significant need to fund more university research, training programs and start-up incubators. Structured government programs with targeted funding would also encourage further development.

Secondly, large, clean, curated and labelled datasets need to be readily available and accessible to the entire research community. This is a significant roadblock today due to HIPAA (the Health Insurance Portability and Accountability Act of 1996) in the US and other patient privacy regulations around the world. As mentioned earlier, the quality of a trained deep learning (DL) model improves with the size and quality of the training dataset. Many medical equipment makers today develop their own artificial datasets instead, but this is impractical because it is expensive and time-consuming.

Thirdly, from the hardware and platform perspective, vast computing resources are required to speed up the training process, as complex models like the ones mentioned above can take a long time to train.

Lastly, delivering accurate results fast requires a complex, adaptive computing platform: a combined hardware and software approach that partitions the problem efficiently. A typical medical device should be able to capture, process, analyze, evaluate and display data and images in real time, while still performing AI algorithmic processing locally.

This is a major challenge in terms of data throughput, low-latency deterministic processing and the rapid bring-up of data and images.
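The capture, process, analyze and display requirement can be pictured as a pipeline of concurrent stages connected by small bounded queues, so that a new frame can be captured while earlier frames are still being pre-processed or inferred. The Python sketch below illustrates that structure only, under loudly stated assumptions: the stage functions, the threshold "model" and the frame format are hypothetical placeholders, and Python threads stand in for what would be separate hardware blocks (sensor interface, programmable logic, DPU, CPU cores) on an adaptive SoC. It records per-frame end-to-end latency, the metric a real-time device must keep low and predictable.

```python
import queue
import threading
import time

SENTINEL = None  # marks the end of the frame stream

def stage(fn, inbox, outbox):
    """Apply fn to each item from inbox and pass it downstream until the sentinel arrives."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            outbox.put(SENTINEL)
            return
        outbox.put(fn(item))

# Hypothetical stage functions; on a real device these would wrap the sensor,
# the pre-processing logic and the AI accelerator.
def preprocess(frame):
    frame["pixels"] = [p / 255.0 for p in frame["pixels"]]  # normalise to [0, 1]
    return frame

def infer(frame):
    # Placeholder "model": flags a frame whose mean intensity exceeds 0.5.
    frame["flagged"] = sum(frame["pixels"]) / len(frame["pixels"]) > 0.5
    return frame

def run_pipeline(frames, depth=4):
    # Bounded queues cap memory use and apply back-pressure to the capture stage.
    q_in, q_pre, q_out = (queue.Queue(maxsize=depth) for _ in range(3))
    workers = [
        threading.Thread(target=stage, args=(preprocess, q_in, q_pre)),
        threading.Thread(target=stage, args=(infer, q_pre, q_out)),
    ]
    results = []

    def display():
        # Final stage: record end-to-end latency from capture to display.
        while (frame := q_out.get()) is not SENTINEL:
            frame["latency_ms"] = (time.perf_counter() - frame["t_capture"]) * 1000
            results.append(frame)

    workers.append(threading.Thread(target=display))
    for w in workers:
        w.start()

    for i, pixels in enumerate(frames):  # "capture" stage runs in the caller's thread
        q_in.put({"id": i, "pixels": pixels, "t_capture": time.perf_counter()})
    q_in.put(SENTINEL)

    for w in workers:
        w.join()
    return results

# Usage with three hypothetical 3-pixel "frames": frames 0 and 2 are flagged.
for r in run_pipeline([[200, 220, 210], [10, 20, 30], [255, 255, 0]]):
    print(r["id"], r["flagged"], f'{r["latency_ms"]:.3f} ms')
```

Bounding the queue depth trades a little throughput for predictable latency: when a downstream stage falls behind, capture blocks instead of letting a backlog of stale frames build up.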

For all medical devices, large and small, power dissipation and heat need to be reduced for patient comfort and for Energy Star compliance. For handhelds and portables, the challenge is also to avoid overheating in the operator’s hand and to conserve battery life. System-on-modules (SOMs), such as the AMD Kria family, can be used for exactly this purpose. With these types of adaptive, embedded FPGA-based SoC solutions, the desired results can be achieved with very low latency, high determinism and high accuracy.

It is no longer hard to imagine a world where heart disease, strokes and cancers can be diagnosed early enough to save significantly more lives. If industry, government and academia address the above-mentioned challenges together, continued development in medical AI and medical imaging, combined with continued innovation in adaptive computing devices like FPGAs and embedded SoCs, will save many additional lives every day through earlier AI-based disease detection and diagnosis.