As humanity’s ambitions in space continue to grow, the demand for advanced technologies that enable autonomous operations, precise navigation, and efficient maintenance has become paramount. At the heart of these capabilities lies machine vision, a fusion of sophisticated imaging hardware and artificial intelligence (AI) that empowers spacecraft to perceive, interpret, and interact with their environments. From exploring the Martian surface to servicing satellites in orbit and removing hazardous debris, machine vision is revolutionizing how we operate in space.

Editor in Chief

Navigating the Martian Terrain with Autonomy

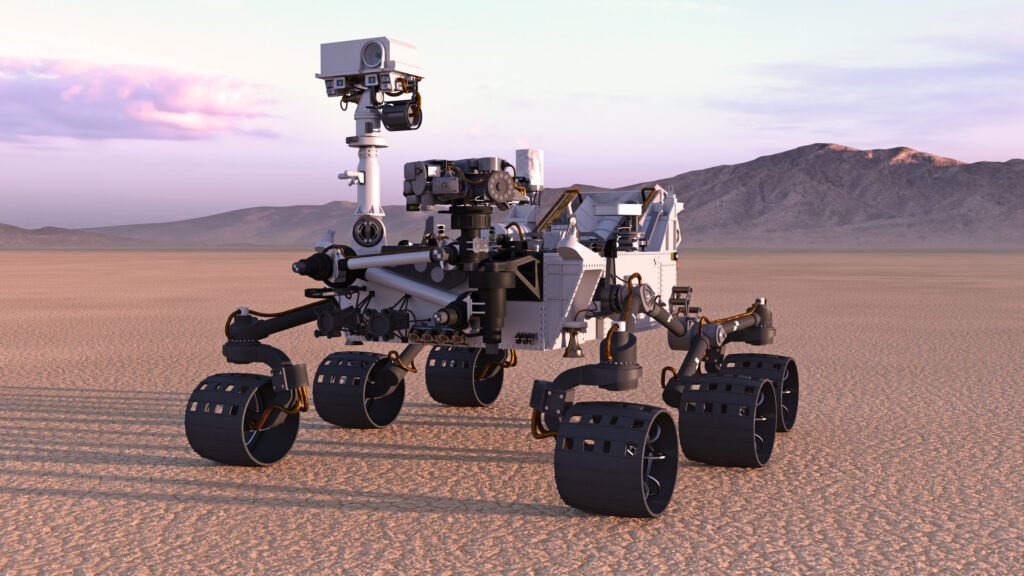

Mars has long been a focal point for robotic exploration, with missions like NASA’s Spirit and Opportunity rovers paving the way for more advanced successors. These early rovers relied heavily on pre-programmed instructions and limited autonomy, often requiring significant human oversight. However, the introduction of machine vision technologies has dramatically enhanced the capabilities of newer missions.

NASA’s Perseverance rover is a prime example. It carries stereo navigation and hazard-avoidance cameras paired with onboard autonomy software, so the rover can assess terrain, identify obstacles, and make real-time navigation decisions. These vision-based systems let the rover traverse the Martian surface with far fewer commands from Earth. That is a necessity, because a signal takes an average of about 12 minutes to travel between Earth and Mars. Machine vision reduces risk and expands the scientific return of the mission by allowing the rover to focus on areas of high geological interest autonomously.
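A stereo camera pair estimates depth from disparity, which is the horizontal shift of the same feature between the left and right images. For a rectified pair, depth is Z = f·B/d, where f is the focal length in pixels, B is the baseline between the two cameras, and d is the disparity in pixels. The sketch below shows only this geometric step, under stated assumptions. It is not Perseverance's navigation software, and the focal length, baseline and disparity values are hypothetical numbers chosen for illustration.

```python
import numpy as np

def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Convert a disparity map (pixels) to depth (metres) for a rectified stereo pair.

    Depth follows Z = f * B / d. Pixels with zero or negative disparity
    (no reliable match between the two images) are returned as NaN.
    """
    d = np.asarray(disparity_px, dtype=float)
    depth = np.full(d.shape, np.nan)
    valid = d > 0
    depth[valid] = focal_px * baseline_m / d[valid]
    return depth

if __name__ == "__main__":
    # Hypothetical camera parameters, for illustration only.
    focal_px = 1000.0   # focal length expressed in pixels
    baseline_m = 0.4    # distance between the two camera centres
    disparity = np.array([[40.0, 200.0, 0.0]])
    print(disparity_to_depth(disparity, focal_px, baseline_m))
```

With these assumed values, a 40-pixel disparity corresponds to 10 m and a 200-pixel disparity to 2 m. The unmatched pixel is reported as NaN. Depth is inversely proportional to disparity, so a one-pixel matching error matters far more for distant terrain than for nearby rocks.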

Looking ahead, similar technologies are expected to power future Mars Sample Return missions and support human exploration under NASA’s Artemis program.

Extending Satellite Lifespans Through In-Orbit Servicing

With thousands of satellites currently orbiting Earth and more launching every year, ensuring their functionality over time is critical. Traditionally, satellites that became non-operational due to fuel depletion or technical issues were either abandoned or replaced. Today, machine vision systems are making in-orbit servicing a practical and more sustainable alternative.

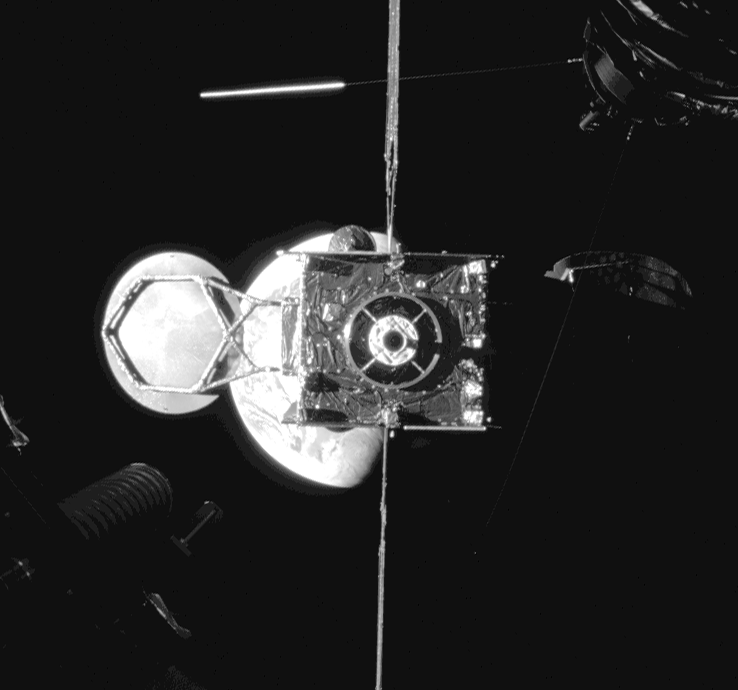

Northrop Grumman’s SpaceLogistics has led this charge with its Mission Extension Vehicle (MEV). In 2020, MEV-1 successfully docked with Intelsat 901 to provide propulsion and attitude control, extending the satellite’s lifespan by five years. This was the first successful commercial satellite-servicing mission.

Building on this, Northrop Grumman is developing the Mission Robotic Vehicle (MRV), equipped with robotic arms and advanced vision systems that will install Mission Extension Pods (MEPs) onto aging satellites. Once installed, each pod provides supplemental propulsion to its host satellite. The MRV uses machine vision for docking alignment, surface inspection, and precise robotic arm control, all critical to mission safety and effectiveness.

Pioneering On-Orbit Assembly and Manufacturing

Maxar Technologies has been an innovator in vision-assisted robotics for both Earth observation and on-orbit servicing. In partnership with NASA, Maxar was tasked with developing the OSAM-1 (On-orbit Servicing, Assembly, and Manufacturing-1) mission. This ambitious effort aimed to demonstrate not just satellite refueling, but also robotic in-space construction — all guided by machine vision systems.

OSAM-1 was to use Maxar’s sophisticated robotic arms and visual navigation tools to grasp, cut through insulation, unscrew fuel caps, and transfer propellant — effectively giving satellites a second life. Although NASA ultimately canceled the mission in 2024 due to budget and schedule overruns, the underlying vision and AI technology developed during OSAM-1 will inform future servicing and assembly projects.

Reducing Space Debris with Vision-Enabled Robotics

Space debris is one of the most urgent challenges in orbital operations. More than 100 million pieces of debris larger than a millimetre are estimated to be circling Earth. They range from defunct satellites to tiny fragments, all traveling at tens of thousands of kilometres per hour. Even a tiny shard of metal can destroy a functioning spacecraft.
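Even a tiny fragment is dangerous because of its speed, not its size. Kinetic energy is E = ½mv². The back-of-the-envelope calculation below assumes a 1-gram fragment striking at a relative speed of 10 km/s. Both numbers are illustrative assumptions, since real collision speeds vary widely. The calculation also converts the result to the conventional TNT equivalent of 4.184 kJ per gram.

```python
TNT_J_PER_GRAM = 4184.0  # conventional TNT equivalent: 4.184 kJ per gram

def kinetic_energy_j(mass_kg: float, speed_m_s: float) -> float:
    """Kinetic energy E = 1/2 * m * v^2, in joules."""
    return 0.5 * mass_kg * speed_m_s ** 2

def tnt_equivalent_g(energy_j: float) -> float:
    """Express an energy in grams of TNT equivalent."""
    return energy_j / TNT_J_PER_GRAM

if __name__ == "__main__":
    # Illustrative assumption: a 1 g fragment at a 10 km/s relative speed.
    energy = kinetic_energy_j(0.001, 10_000.0)
    print(f"{energy / 1000:.0f} kJ, about {tnt_equivalent_g(energy):.0f} g of TNT")
    # prints: 50 kJ, about 12 g of TNT
```

Energy grows with the square of speed. Doubling the impact speed therefore quadruples the damage potential, while doubling the mass only doubles it.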

Astroscale, a Japanese-headquartered company, is pioneering solutions to this crisis. Its ELSA-d (End-of-Life Services by Astroscale-demonstration) mission used magnetic docking and vision-based navigation to track and capture simulated debris. The company’s approach relies heavily on machine vision: cameras, LiDAR, and computer vision algorithms detect, identify, and determine the trajectory of space junk, enabling safe capture and deorbiting.
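To determine a target's trajectory, a servicer repeatedly measures the target's position relative to itself and fits a motion model to those measurements. The minimal sketch below makes two assumptions. First, it assumes the relative positions have already been extracted from camera or LiDAR data. Second, it treats the relative motion as roughly constant-velocity over a short window. It then fits position and velocity by least squares. This is an illustration only and does not describe Astroscale's method. Operational rendezvous navigation typically relies on orbital-dynamics models and recursive filters such as the Kalman filter. The function names are hypothetical.

```python
import numpy as np

def fit_constant_velocity(times_s, positions_m):
    """Least-squares fit of p(t) = p0 + v * t to relative position measurements.

    times_s: shape (N,) measurement times in seconds.
    positions_m: shape (N, 3) relative positions (x, y, z) in metres.
    Returns (p0, v): position at t = 0 and velocity, each of shape (3,).
    """
    t = np.asarray(times_s, dtype=float)
    p = np.asarray(positions_m, dtype=float)
    # Design matrix with columns [1, t]; lstsq solves all three axes at once.
    A = np.column_stack([np.ones_like(t), t])
    coeffs, *_ = np.linalg.lstsq(A, p, rcond=None)
    return coeffs[0], coeffs[1]

def predict(p0, v, t_s):
    """Extrapolate the fitted straight-line model to time t_s."""
    return p0 + v * t_s

if __name__ == "__main__":
    # Synthetic example: a target drifting relative to the servicer, plus noise.
    rng = np.random.default_rng(seed=0)
    times = np.arange(10.0)                   # one measurement per second
    true_p0 = np.array([100.0, -20.0, 5.0])   # metres
    true_v = np.array([-0.5, 0.1, 0.0])       # metres per second
    noise = rng.normal(0.0, 0.05, size=(10, 3))
    measured = true_p0 + np.outer(times, true_v) + noise
    p0, v = fit_constant_velocity(times, measured)
    print("estimated velocity (m/s):", v)
    print("predicted position at t = 20 s:", predict(p0, v, 20.0))
```

Fitting many measurements at once averages out sensor noise. The straight-line model is only trustworthy over short intervals, however, because orbital motion curves over time.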

Its upcoming Active Debris Removal (ADR) services aim to remove large, high-risk objects in collaboration with national space agencies. Vision systems allow these spacecraft to perform complex rendezvous maneuvers, inspect target objects, and dock with or manipulate them autonomously.

Enhancing Earth Observation and Environmental Monitoring

Machine vision in space isn’t only about exploring beyond Earth; it’s also transforming how we understand our own planet. High-resolution optical sensors aboard satellites are paired with AI models to detect, classify, and monitor changes across Earth’s surface in near real time.

Maxar Technologies is again a leader here. Its satellites monitor deforestation in the Amazon, detect early signs of crop stress, and analyse infrastructure damage from natural disasters. The combination of multispectral imaging with AI-driven interpretation allows for faster response in crisis zones and more informed decision-making in climate and agricultural policy.

During natural disasters such as the March 2025 earthquake in Myanmar, vision-equipped satellites enabled humanitarian organizations to assess infrastructure damage before ground teams could arrive. AI-based vision systems helped classify building collapses, blocked roads, and landslide zones, streamlining emergency responses.

Precision Optics

None of these machine vision capabilities would be possible without the optics behind the cameras. German lens manufacturer Schneider-Kreuznach plays a crucial role in this space. Known for its precision industrial lenses, the company designs optics that support high-resolution imaging under extreme conditions.

Its ruggedized lenses are tailored for machine vision systems used in aerospace and satellite imaging. These optics deliver low distortion, consistent sharpness, and high transmission rates, all essential for reliable vision performance in orbit. Schneider-Kreuznach lenses are compatible with a range of sensor types, including visible and infrared, making them suitable for diverse space-based applications from surveillance to thermal mapping.

As machine vision systems evolve to operate with tighter tolerances and more autonomy, the importance of dependable, high-performance optics continues to grow.

Human-Robot Collaboration on the Moon

As NASA’s Artemis program prepares for a human return to the Moon, machine vision is poised to take on an even more collaborative role, supporting astronauts in real time.

NASA envisions robotic assistants equipped with vision systems to perform habitat inspections, navigate lunar terrain, or assist astronauts in construction and maintenance tasks. These robots will rely on computer vision to recognize tools, follow humans, and adapt to changing lighting conditions.

The European Space Agency (ESA) is also exploring surface vision systems for rovers that will scout terrain, collect samples, and assist astronauts during EVA (extravehicular activity) missions. Here, machine vision plays a dual role, enabling autonomous operations and providing visual data for mission control.

The challenges on the Moon, including high-contrast lighting, dust interference, and lack of GPS, make machine vision an essential enabler for any autonomous or semi-autonomous system.

The Future: Deep Space Vision and AI at the Edge

Machine vision is advancing quickly, and future missions may take it even farther. Deep space missions, such as probes to Europa, Enceladus, or the asteroid belt, will require extreme levels of autonomy due to long communication delays and harsh conditions.

NASA’s Europa Clipper, which launched in October 2024 and is due to reach Jupiter in 2030, will use its cameras to map the icy moon’s surface, help identify potential future landing sites, and investigate the ocean thought to lie beneath its ice. Future missions may incorporate AI that learns and adapts in real time, a capability that could revolutionize exploration of unfamiliar terrain.

At the same time, machine vision is becoming more efficient. Vision systems are being designed to run on edge processors, allowing AI inference directly aboard spacecraft. This reduces reliance on ground-based analysis and allows for faster, smarter decision-making in mission-critical moments.

Timeline of Machine Vision in Space: Key Milestones

- 2004 – NASA’s Spirit and Opportunity rovers use basic stereo vision for hazard avoidance

- 2012 – Curiosity rover adds more autonomous terrain assessment

- 2020 – Northrop Grumman’s MEV-1 completes first satellite life extension with visual docking

- 2021 – Perseverance lands on Mars and uses stereo vision and visual odometry to drive autonomously

- 2021 – Astroscale’s ELSA-d tests debris capture with vision systems

- 2024 – NASA cancels OSAM-1, but Maxar’s technology lays the groundwork for future on-orbit repair

- 2025 and beyond – Artemis program plans machine vision-enabled robotic support on the Moon

So, from the red sands of Mars to the bustling orbits of Earth, machine vision systems have become essential to space exploration and infrastructure management. Companies like Northrop Grumman, Maxar Technologies, Astroscale, and Schneider-Kreuznach are leading the charge by integrating intelligent vision into robotics, satellites, and autonomous spacecraft.

These technologies are extending the lives of satellites, reducing debris, enhancing planetary science, and preparing us for a future where humans and machines work side by side in the harshest environments known to science.

As machine vision continues to evolve, becoming smarter, more compact, and more adaptive, it will be the eyes through which we explore the final frontier.