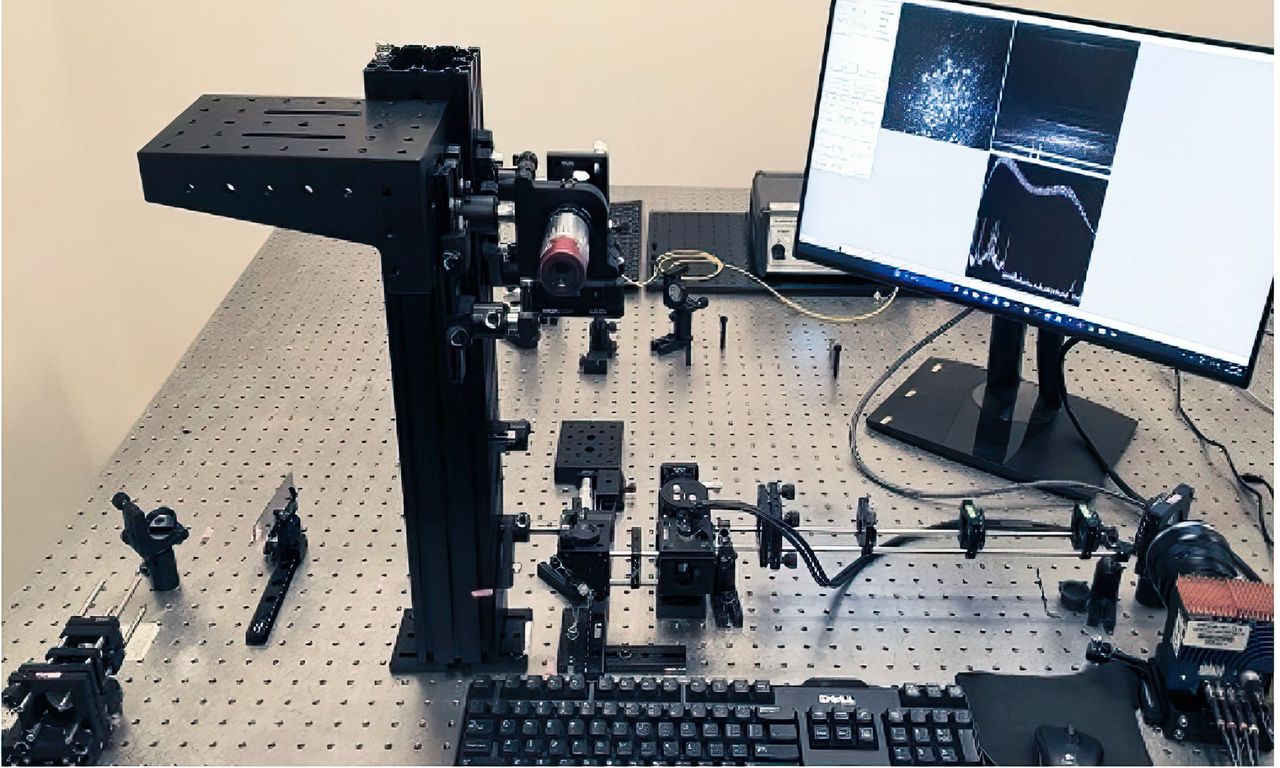

Crafting an efficient machine vision system is a complex process that requires careful consideration of various factors beyond just the camera choice.

While selecting the right camera is undoubtedly crucial, other elements, such as lighting conditions, lens selection, image processing capabilities, and software algorithms, play equally significant roles in determining the system’s overall performance and accuracy. Understanding these factors and their impact on the final image is essential for building a successful vision system that meets the specific needs of your application.

This article from Teledyne DALSA will explore the top specifications and variables to consider when constructing a complete vision system, ensuring that you achieve optimal results in your image analysis and processing tasks.

1. Environmental challenges

Images are captured all around the world in many different settings. For instance, security cameras are common in buildings, toll booths on freeways use embedded vision systems, board-level modules are integrated into aerial imaging drones, and IP67-rated cameras are deployed in smart agriculture.

Accounting for environmental factors that might affect the system’s performance, such as temperature, humidity, and vibration, is crucial for success. By implementing calibration procedures that keep measurements accurate and results consistent, an engineering team can accommodate variability in operating conditions.

The conditions in which a vision system operates play a major role in determining the specifications needed to achieve the desired image quality. Outdoor imaging is affected by weather, such as direct sunlight, rain, snow, and heat, along with other external factors beyond the designer’s control. Nevertheless, vision systems can be designed with these factors in mind. For example, a team can select a camera that supports automatic brightness control to compensate for lighting variation, or add lighting to the system. Likewise, a suitable housing or an IP67-rated camera protects the sensor from harsh weather, helping the camera capture clear images consistently.
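Automatic brightness control is typically a feedback loop: measure the brightness of the last frame, then adjust the exposure for the next one. The sketch below is a minimal proportional version, assuming a linear sensor response, an 8-bit mid-gray target of 128, and illustrative exposure limits. The function `next_exposure_us` and its defaults are hypothetical and do not represent any specific camera's firmware.

```python
def next_exposure_us(current_exposure_us: float, mean_level: float,
                     target_level: float = 128.0,
                     min_us: float = 10.0, max_us: float = 20_000.0) -> float:
    """Propose the exposure time (µs) for the next frame.

    Assumes pixel values scale linearly with exposure, so the exposure is
    rescaled by target/measured brightness, then clamped to the (assumed)
    range the camera supports.
    """
    if mean_level <= 0:
        # Completely dark frame: no ratio to compute, so open up fully.
        return max_us
    proposed = current_exposure_us * target_level / mean_level
    return min(max(proposed, min_us), max_us)


# A frame averaging 200 at 1000 µs is too bright; scale down toward 128.
print(next_exposure_us(1000.0, 200.0))  # 640.0
```

Saturated pixels make the measured mean underestimate the true brightness, so practical loops often work from a histogram or damp each update rather than jumping straight to the proposed value.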

2. Imaging sensors

Selecting the right camera for a vision system hinges primarily on the image sensor’s specifications, and understanding a camera’s capabilities starts with knowing which sensor it uses. However, two cameras built around the same sensor can still produce noticeably different images, because the readout electronics, firmware, and on-camera processing also shape the result. Hence, considering other factors becomes crucial.

The sensor format significantly influences which optics can be paired with the camera and how the images look. Common formats include APS-C, 1.1-inch, 1-inch, and 2/3-inch, and each requires a lens whose image circle covers the full sensor. Larger sensors often allow for more pixels, resulting in higher-resolution images. Nevertheless, several other specifications are equally important: parameters such as full well capacity, quantum efficiency, and shutter type (global or rolling) all determine how well the sensor handles diverse targets in different scenarios.

3. Optics

Once the field of view, magnification, and resolution have been determined for a given application, a crucial next step is choosing the right lens to focus on the target. Camera setups in machine vision vary with the application. Systems that must image targets at different sizes or distances may need a zoom lens, whose adjustable focal length changes the field of view. Many machine vision cameras, on the other hand, are fixed on a specific target area and use prime lenses with a set focal length.
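Determining resolution usually starts from the field of view and the smallest feature that must be detected. The sketch below assumes a common rule of thumb that the smallest feature should span at least two pixels; that factor, the function name, and the example numbers are illustrative assumptions, and demanding inspections often use more pixels per feature.

```python
import math


def required_pixels(fov_mm: float, smallest_feature_mm: float,
                    pixels_per_feature: float = 2.0) -> int:
    """Minimum pixel count along one axis of the sensor.

    The field of view is divided into feature-sized steps, and each step
    must be covered by `pixels_per_feature` pixels (an assumed rule of thumb).
    """
    exact = fov_mm / smallest_feature_mm * pixels_per_feature
    # Round first so float error (e.g. 2000.0000000000002) does not add a pixel.
    return math.ceil(round(exact, 6))


# Hypothetical inspection: 100 mm x 80 mm field of view, 0.1 mm defects.
print(required_pixels(100.0, 0.1), required_pixels(80.0, 0.1))  # 2000 1600
```

In this example the sensor needs at least 2000 × 1600 pixels, about 3.2 megapixels, before adding any margin for part positioning.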

To ensure compatibility, the lens mount must match the camera, and the lens must be designed to cover the sensor it is paired with. Common lens mounts for machine vision include C-mount, CS-mount, and M42-mount. Consequently, the first step in lens selection is to consider the sensor specifications.

The primary specification for a lens is its focal length. As the focal length decreases, the field of view (FoV) increases: at a fixed working distance, the width of the imaged area is roughly inversely proportional to the focal length. A shorter focal length therefore captures a broader area, with each element of the scene reproduced at lower magnification. Other valuable specifications to consider include working distance and aperture.
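The focal-length relationship can be estimated with the thin-lens model, in which magnification is the sensor width divided by the FoV width. The sketch below is an approximation under that assumption: it ignores lens thickness and distortion and measures distance from the lens to the object. The function names and numbers are hypothetical, and a lens maker's datasheet or calculator should have the final say.

```python
def focal_length_mm(sensor_width_mm: float, fov_width_mm: float,
                    object_distance_mm: float) -> float:
    """Focal length that images `fov_width_mm` onto the sensor (thin lens)."""
    m = sensor_width_mm / fov_width_mm          # magnification
    return object_distance_mm * m / (1.0 + m)   # from 1/f = 1/u + 1/v, v = m*u


def fov_width_mm(sensor_width_mm: float, focal_mm: float,
                 object_distance_mm: float) -> float:
    """Width of the imaged area for a given focal length (thin lens)."""
    m = focal_mm / (object_distance_mm - focal_mm)
    return sensor_width_mm / m


# Hypothetical 10 mm wide sensor, object 500 mm from the lens.
print(round(focal_length_mm(10.0, 100.0, 500.0), 1))  # 45.5
for f in (50.0, 25.0, 12.5):
    print(f, round(fov_width_mm(10.0, f, 500.0), 1))
```

With these numbers, halving the focal length from 25 mm to 12.5 mm widens the FoV from 190 mm to 390 mm, roughly doubling it, which is the inverse relationship described above.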

4. Lighting

Arguably, the most critical component of a vision system is the lighting. However sensitive a camera sensor is in low light, nothing can substitute for the clarity of a well-illuminated target. Moreover, lighting comes in many forms, each of which can reveal different features of the target.

Area lights are a versatile solution that distributes illumination evenly, provided the target is kept at a reasonable distance from the light source to avoid hot spots. Ring lights, on the other hand, can be valuable for highly reflective surfaces by reducing unwanted reflections. Other lights serve specialized purposes: dome lights for machined metals, structured light for 3D object mapping, and colored light to enhance details and increase contrast. The right choice of lighting and wavelength can significantly affect how well a vision system captures essential information from the target.

5. Filters

Excessive light passing through the lens can wash out critical details in the image. To tackle this, filters can reduce or eliminate specific types of light. Two of the primary types of color filter are dichroic and absorptive filters: dichroic filters reflect unwanted wavelengths, while absorptive filters absorb them, so that only the required wavelengths are transmitted. This lets a vision system restrict the color spectrum that reaches the sensor as needed.

However, filters serve purposes beyond color selection. Neutral density (ND) filters, for instance, reduce overall light levels evenly across the spectrum, while polarizers block light of one polarization orientation; because reflected glare is partially polarized, a correctly oriented polarizer minimizes reflected light. Furthermore, anti-reflective (AR) coatings play a vital role in reducing reflections within the vision system. Such coatings are valuable in applications like intelligent traffic systems (ITS), where reduced glare improves the accuracy of optical character recognition (OCR) software. With the right filters, a vision system can be optimized to capture essential information with greater precision and clarity.
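ND filters are usually specified by optical density (OD), where the transmitted fraction of light is 10^-OD. The sketch below converts between OD, transmission, and photographic stops (factors of two). The function names are illustrative, and a real filter's datasheet transmission curve takes precedence over this idealized, spectrally flat model.

```python
import math


def nd_transmission(optical_density: float) -> float:
    """Fraction of incident light an ideal ND filter transmits."""
    return 10.0 ** (-optical_density)


def nd_stops(optical_density: float) -> float:
    """Reduction in photographic stops: each stop halves the light."""
    return optical_density * math.log2(10.0)


def od_for_reduction(factor: float) -> float:
    """Optical density needed to cut the light by `factor` (e.g. 8 -> 1/8)."""
    return math.log10(factor)


print(round(nd_transmission(0.9), 3))  # 0.126
print(round(nd_stops(0.9), 2))         # 2.99
print(round(od_for_reduction(8), 2))   # 0.9
```

An OD 0.9 filter therefore passes about 12.6% of the light, a reduction of roughly three stops.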

6. Frame rate

The speed of a camera is measured in frames per second (fps). A higher frame rate allows the camera to capture more images in the same amount of time, which has implications for each individual image. Because each exposure must fit within the frame period (1/fps), raising the frame rate shortens the maximum available exposure time. Shorter exposures produce less motion blur when capturing fast-moving targets, like objects on a conveyor belt, yielding sharper and more precise images.

However, shorter exposure times also mean that the sensor has less time to collect light for each image. A sensor with larger pixels can mitigate this, because each pixel collects more light during the same exposure and the image is therefore brighter. The balance between frame rate, exposure time, and pixel size plays a critical role in optimizing the camera’s performance for specific applications and imaging requirements.
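The link between exposure time and blur can be made concrete: a target moving at speed v during exposure t travels v·t, and dividing by the size of one pixel on the target gives the blur in pixels. The sketch below assumes constant speed and a known object-space pixel size; the names and numbers are hypothetical, and it treats the frame period 1/fps as the exposure ceiling, ignoring any readout overhead a real camera may add.

```python
from typing import Optional


def motion_blur_px(speed_mm_s: float, exposure_s: float,
                   mm_per_px: float) -> float:
    """How many pixels the target moves while the shutter is open."""
    return speed_mm_s * exposure_s / mm_per_px


def max_exposure_s(speed_mm_s: float, mm_per_px: float,
                   max_blur_px: float = 1.0,
                   fps: Optional[float] = None) -> float:
    """Longest exposure that keeps blur within `max_blur_px` pixels.

    If a frame rate is given, the exposure is also capped at the frame
    period 1/fps (readout overhead is ignored in this sketch).
    """
    t = max_blur_px * mm_per_px / speed_mm_s
    if fps is not None:
        t = min(t, 1.0 / fps)
    return t


# Hypothetical conveyor: 500 mm/s, each pixel covers 0.05 mm of the belt.
print(round(motion_blur_px(500.0, 100e-6, 0.05), 3))   # 1.0
print(round(max_exposure_s(500.0, 0.05) * 1e6, 1))     # 100.0 (µs)
```

Doubling the belt speed halves the allowable exposure, which is where larger pixels, added lighting, or gain come into play.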

7. Hardware and processing power

The type of hardware required for a vision system depends on the complexity of the image analysis. For more difficult inspections, where variability in the targets is a concern, artificial intelligence (AI) can be useful. High-performance AI systems used for deep learning require powerful hardware such as graphics processing units (GPUs) or field-programmable gate arrays (FPGAs). They also need large amounts of memory to handle the datasets required for the AI to cope with the variability in the captured images.

When imaging predictable targets, traditional computer vision algorithms can run on more power-efficient hardware. This is more cost-effective and demands fewer resources, which helps in applications where heat or limited power consumption is a critical factor. Combining AI with traditional algorithms can ensure efficient performance in various situations without overwhelming the system.

8. Noise and gain

When a high frame rate is essential and short exposures cannot be avoided, the camera’s gain can compensate for the reduced brightness. Increasing the gain amplifies the sensor’s output signal, so the vision system can produce a brighter image from less light. However, relying solely on gain to address lighting challenges is not always ideal because of the noise it amplifies.

As the gain increases, the noise in the image is amplified along with the signal, reducing clarity. This noise comes from various sources, such as read noise and dark current noise. So while gain lets the camera work with less light, it also compromises image quality by making unwanted artifacts more visible.

Finding the right balance between gain, frame rate, exposure time, and other camera functions like dual exposure is crucial for achieving the best possible image quality while meeting the requirements of the specific application and lighting conditions.
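A simple noise model shows why gain cannot replace light. For a pixel that collects S photoelectrons, shot noise contributes variance S, dark current adds its accumulated electrons D, and read noise adds r², giving an SNR of S/√(S + D + r²). The sketch below assumes gain is a pure multiplier applied after these noise sources, which is a simplification (analog gain ahead of the analog-to-digital converter can slightly reduce quantization noise). The function names and electron counts are hypothetical.

```python
import math


def snr(signal_e: float, dark_e: float, read_noise_e: float) -> float:
    """Signal-to-noise ratio for shot, dark-current, and read noise.

    signal_e:     photoelectrons collected during the exposure
    dark_e:       dark-current electrons accumulated during the exposure
    read_noise_e: read noise in electrons RMS
    """
    noise_e = math.sqrt(signal_e + dark_e + read_noise_e ** 2)
    return signal_e / noise_e


def apply_gain(signal_e: float, dark_e: float, read_noise_e: float,
               gain_dn_per_e: float) -> tuple[float, float]:
    """Output signal and noise in digital numbers (DN) after a gain stage.

    Gain scales signal and noise by the same factor, so brightness rises
    while the SNR stays where the light level left it.
    """
    noise_e = math.sqrt(signal_e + dark_e + read_noise_e ** 2)
    return gain_dn_per_e * signal_e, gain_dn_per_e * noise_e


dim = snr(100.0, 0.0, 10.0)       # short exposure, little light
bright = snr(400.0, 0.0, 10.0)    # 4x the light (longer exposure or lighting)
level, noise = apply_gain(100.0, 0.0, 10.0, 4.0)  # 4x gain instead
print(round(dim, 2), round(bright, 2), round(level / noise, 2))  # 7.07 17.89 7.07
```

Quadrupling the light more than doubles the SNR, while quadrupling the gain gives the same brightness with no SNR improvement.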

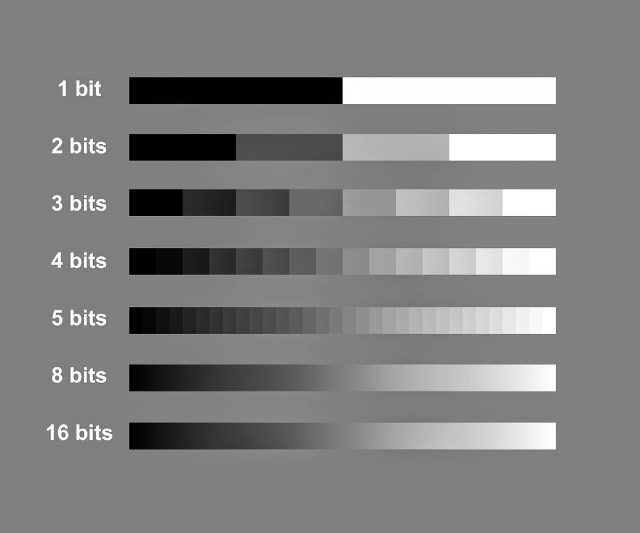

9. Bit depth and dynamic range

To measure certain targets accurately, a vision system requires a sufficiently high bit depth. A higher bit depth provides more gray levels per pixel, so finer differences in intensity between pixels can be resolved, enhancing the precision of measurements. Dynamic range, on the other hand, refers to a camera’s ability to capture detail across a wide range of brightness, from the brightest to the darkest areas of the image.
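Both quantities can be estimated from datasheet values. Bit depth sets the number of gray levels, 2^bits, while a common simplified definition of dynamic range is the ratio of full well capacity to read noise, expressed in decibels (20·log10) or in bits (log2). The sensor figures below are hypothetical, and standardized measurement methods such as EMVA 1288 define these terms more carefully.

```python
import math


def gray_levels(bit_depth: int) -> int:
    """Number of distinct intensity values a pixel can report."""
    return 2 ** bit_depth


def dynamic_range_db(full_well_e: float, read_noise_e: float) -> float:
    """Dynamic range in dB, using full well / read noise as the ratio."""
    return 20.0 * math.log10(full_well_e / read_noise_e)


def dynamic_range_bits(full_well_e: float, read_noise_e: float) -> float:
    """The same ratio in bits: roughly the ADC depth needed to preserve it."""
    return math.log2(full_well_e / read_noise_e)


# Hypothetical sensor: 10,000 e- full well, 2.5 e- read noise.
print(gray_levels(8), gray_levels(12))                 # 256 4096
print(round(dynamic_range_db(10_000, 2.5), 1))         # 72.0
print(math.ceil(dynamic_range_bits(10_000, 2.5)))      # 12
```

An 8-bit output would discard much of this hypothetical sensor's range, which is why measurement applications often select a 10- to 14-bit mode.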

Healthcare applications such as fluoroscopic imaging typically use bit depths of 10 to 14 bits. In outdoor applications, bit depths higher than 8 bits are rarely necessary unless precise measurements are required, such as in photogrammetry. However, a high dynamic range can be immensely beneficial outdoors: it lets the camera capture data in challenging lighting conditions, such as bright sunlight that would overexpose some parts of the image, while retaining detail in shadowed areas of the target.

Increasing gain or exposure time may seem like a solution, but it creates a trade-off: capturing detail in the shadows pushes already bright sections into saturation, losing data there. A high dynamic range, in contrast, preserves detail in both highlights and shadows, overcoming the limitations posed by extreme lighting conditions and enabling more accurate vision system measurements.
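One way to extend dynamic range is to combine a long and a short exposure of the same scene, as with the dual-exposure feature mentioned earlier. The sketch below is a generic merge, not any particular camera's algorithm: it assumes a linear response, perfectly aligned frames (no motion between them), zero black level, and 8-bit data that saturates at 255. Where the long exposure is clipped, it substitutes the short-exposure value scaled by the exposure ratio.

```python
def merge_dual_exposure(long_px, short_px, t_long, t_short, saturation=255):
    """Merge two aligned exposures into one linear high-dynamic-range signal.

    Values are expressed on the long exposure's scale, so the result can
    exceed `saturation` and needs a wider data type or tone mapping for display.
    """
    ratio = t_long / t_short
    merged = []
    for long_val, short_val in zip(long_px, short_px):
        if long_val < saturation:
            # Long exposure is valid here and collected more light: keep it.
            merged.append(float(long_val))
        else:
            # Long exposure clipped: rescale the short exposure instead.
            merged.append(short_val * ratio)
    return merged


# Hypothetical row of pixels; the long exposure is 8x the short one.
print(merge_dual_exposure([10, 200, 255, 255], [1, 25, 60, 255], 8.0, 1.0))
# [10.0, 200.0, 480.0, 2040.0]
```

The last pixel is saturated in both frames, so its merged value of 2040 is only a lower bound; a wider exposure ratio or a third exposure would be needed to recover it.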

10. Software

Even with high-end hardware, the camera’s capabilities are governed by the software it runs. Software components in a vision system are primarily divided into two fundamental forms: image acquisition and control, and image processing software.

Image acquisition and control software is responsible for gathering raw data from the camera and presenting it in an understandable format for the end user. For instance, in a color camera each pixel sits behind one color element of a physical Bayer filter, so it records only red, green, or blue; the software interpolates this data (a process called demosaicing) to construct a full-color image.
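The simplest demosaicing approach, sketched below, treats each 2×2 RGGB block as one color pixel and averages its two green samples, which halves the resolution; production software typically interpolates (bilinearly or with edge-aware methods) to keep full resolution. The RGGB layout and the function name are assumptions, since sensors also use GRBG, GBRG, and BGGR orderings.

```python
def demosaic_superpixel_rggb(raw):
    """Half-resolution demosaic of an RGGB Bayer mosaic.

    raw is a list of rows with even height and width, laid out as
        R G R G ...
        G B G B ...
    Returns rows of (R, G, B) tuples, one per 2x2 block.
    """
    height, width = len(raw), len(raw[0])
    if height % 2 or width % 2:
        raise ValueError("Bayer mosaic must have even dimensions")
    rgb = []
    for y in range(0, height, 2):
        row = []
        for x in range(0, width, 2):
            r = raw[y][x]
            g = (raw[y][x + 1] + raw[y + 1][x]) / 2   # two green sites per block
            b = raw[y + 1][x + 1]
            row.append((r, g, b))
        rgb.append(row)
    return rgb


mosaic = [[100, 50, 110, 60],
          [40, 20, 70, 30]]
print(demosaic_superpixel_rggb(mosaic))  # [[(100, 45.0, 20), (110, 65.0, 30)]]
```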

The next stage in the software hierarchy involves handling the image data. This entails a variety of tasks in machine vision, such as inspection, analysis, and editing for applications like quality control. For example, when a target passes by the camera and needs to be tested, the software can carry out inspections and analyses to ensure the target meets the specified criteria.
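A pass/fail check of the kind described above can be as simple as measuring a part along one scan line. The sketch below is a hypothetical caliper-style check: it thresholds an intensity profile, takes the span from the first to the last bright pixel as the part width, converts it to millimeters with an assumed calibration (mm per pixel), and compares it with a nominal size and tolerance. Real inspection software usually adds filtering and sub-pixel edge detection.

```python
def measure_width_px(profile, threshold):
    """Pixels from the first to the last sample brighter than `threshold`.

    Assumes a bright part on a dark background; returns 0 if nothing is found.
    """
    bright = [i for i, value in enumerate(profile) if value > threshold]
    if not bright:
        return 0
    return bright[-1] - bright[0] + 1


def inspect_width(profile, threshold, mm_per_px, nominal_mm, tolerance_mm):
    """Return (passed, measured width in mm) for one scan line."""
    width_mm = measure_width_px(profile, threshold) * mm_per_px
    return abs(width_mm - nominal_mm) <= tolerance_mm, width_mm


scan_line = [5, 6, 200, 210, 205, 198, 7, 4]   # hypothetical 8-bit profile
print(inspect_width(scan_line, 100, 0.5, 2.0, 0.1))  # (True, 2.0)
```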

The collaboration between hardware and software components is crucial in achieving optimal performance in a vision system. The software’s ability to process and interpret the image data captured by the camera is what empowers the vision system to fulfill its intended tasks effectively.

11. Data acquisition and transfer

As camera technology advances, cameras generate vast amounts of image data, which calls for efficient data delivery methods. Camera interfaces have diversified to offer options for different imaging applications; the four most prevalent are GigE, USB3, CoaXPress (CXP), and Camera Link HS (CLHS). The main attributes to consider when choosing a vision system interface are the required bandwidth (including whether the interface supports lossless data compression), image synchronization capability, ease of deployment, and cable length.
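Bandwidth is the first of these attributes to check, and a back-of-the-envelope estimate is width × height × bits per pixel × frame rate. The sketch below compares that figure with a link's capacity. `usable_fraction` (protocol overhead) and `compression_ratio` (which, for lossless compression, depends on image content) are placeholders to replace with the interface's actual effective throughput; only the 1 Gb/s nominal line rate of Gigabit Ethernet is used as an example.

```python
def required_gbps(width: int, height: int, bits_per_pixel: int,
                  fps: float, compression_ratio: float = 1.0) -> float:
    """Image payload in gigabits per second (1 Gb = 1e9 bits)."""
    return width * height * bits_per_pixel * fps / compression_ratio / 1e9


def fits_link(width: int, height: int, bits_per_pixel: int, fps: float,
              link_gbps: float, usable_fraction: float,
              compression_ratio: float = 1.0) -> bool:
    """True if the stream fits in the usable share of the link's line rate."""
    need = required_gbps(width, height, bits_per_pixel, fps, compression_ratio)
    return need <= link_gbps * usable_fraction


# Hypothetical 5 MP camera (2448 x 2048), 8-bit mono, on a 1 Gb/s GigE link,
# assuming (for illustration only) that 90% of the line rate is usable.
print(round(required_gbps(2448, 2048, 8, 30), 2))   # 1.2
print(fits_link(2448, 2048, 8, 30, 1.0, 0.9))       # False
print(fits_link(2448, 2048, 8, 20, 1.0, 0.9))       # True
```

Under these assumptions, the 30 fps stream needs a faster interface, a lower frame rate, or lossless compression of roughly 1.34:1 or better if the image content allows it.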

In conclusion, building an efficient machine vision system requires a holistic approach that goes beyond just selecting the right camera. Understanding these various elements and their interplay ensures that you can create a system tailored to your specific needs. As technology continues to evolve, staying informed about the latest advancements in machine vision components and techniques will be crucial in maintaining a competitive edge and achieving optimal outcomes.

By Manny Romero, Teledyne DALSA